Implementing a Highly Available Web Service with Corosync and Pacemaker

Corosync + Pacemaker + iSCSI + httpd for a highly available web service

1. Software overview

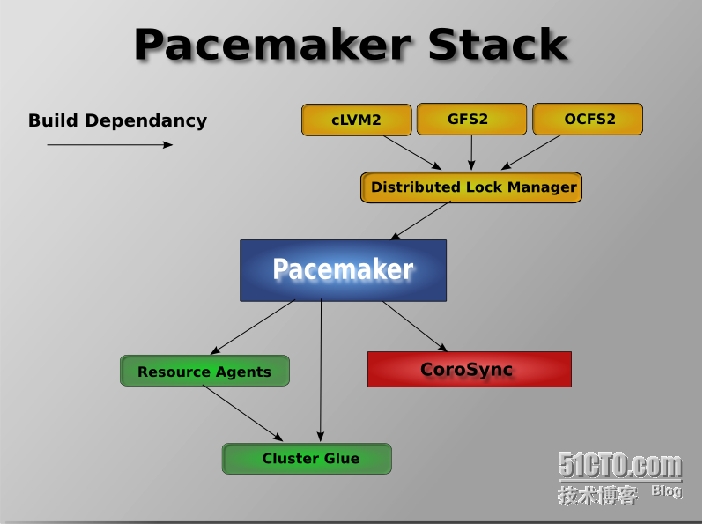

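Corosync provides cluster membership and a reliable group communication protocol.

Pacemaker builds on Corosync/Linux-HA to manage the cluster's services (resources).

Corosync consists of the following components: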

Totem protocol

EVS

CPG

CFG

Quorum

The Extended Virtual Synchrony (EVS) algorithm provides two functions:

synchronization of the group membership list;

reliable multicast of group messages.
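To make the ordering guarantee concrete, here is a deliberately simplified Python model of token-based total ordering, the idea behind the Totem protocol: only the node holding the token may multicast, and each message takes the next sequence number carried by the token, so every member delivers messages in the same order. This is a toy sketch assuming a single ring, no message loss, and no membership changes; the `Node` class and `token_ring_round` function are hypothetical and are not part of Corosync.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A hypothetical ring member with an outbox and a delivery log."""
    name: str
    pending: list = field(default_factory=list)    # messages waiting for the token
    delivered: list = field(default_factory=list)  # messages delivered, in order


def token_ring_round(nodes, seq=0):
    """Pass the token once around the ring and deliver everything sent.

    Only the current token holder may send; each message is stamped with the
    next sequence number carried by the token. Every node then delivers all
    messages sorted by sequence number, so all nodes agree on one order.
    Returns the sequence number left on the token.
    """
    sent = []
    for holder in nodes:                 # token visits members in ring order
        for payload in holder.pending:
            seq += 1
            sent.append((seq, holder.name, payload))
        holder.pending.clear()
    for member in nodes:                 # simulated reliable multicast to all
        member.delivered.extend(sorted(sent))
    return seq


ring = [Node("node1.com", ["a", "b"]), Node("node4.com", ["c"])]
last_seq = token_ring_round(ring)
print(last_seq, ring[0].delivered == ring[1].delivered)
```

Real Totem also retransmits lost messages and rebuilds the ring when membership changes, which is where the membership-synchronization half of EVS comes in.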

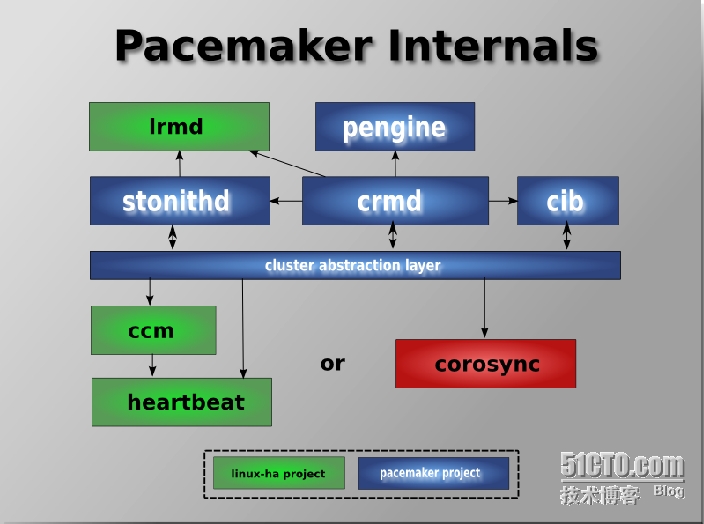

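Pacemaker layered architecture

Pacemaker itself consists of four key components:

CIB (Cluster Information Base)

CRMd (Cluster Resource Management daemon)

PEngine (PE, or Policy Engine)

STONITHd (Shoot-The-Other-Node-In-The-Head)

Internal component structure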
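The division of labour can be pictured with a toy model: the CIB holds the desired configuration and the current status, the policy engine compares the two and produces a list of actions (a transition), and CRMd carries those actions out and records the result. The Python sketch below is a hypothetical simplification: `compute_transition` and `execute` are illustrative names, the desired placement is given directly as a resource-to-node map, and the real policy engine derives placement from scores, constraints, and ordering.

```python
def compute_transition(desired, current):
    """Toy 'policy engine': diff desired placement against current state.

    desired / current map resource name -> node name (missing = stopped).
    Returns an ordered list of (action, resource, node) tuples.
    """
    actions = []
    for rsc in sorted(set(desired) | set(current)):
        want, have = desired.get(rsc), current.get(rsc)
        if want == have:
            continue                      # already where it should be
        if have is not None:
            actions.append(("stop", rsc, have))
        if want is not None:
            actions.append(("start", rsc, want))
    return actions


def execute(actions, current):
    """Toy 'CRMd': apply each action in order and update the status map in place."""
    for action, rsc, node in actions:
        if action == "stop":
            current.pop(rsc, None)
        else:
            current[rsc] = node
    return current


status = {"vip": "node1.com"}
plan = compute_transition({"vip": "node4.com", "website": "node4.com"}, status)
print(plan)
print(execute(plan, status))
```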

2. Installing the software and setting up the web service

Lab environment: RHEL 6.5

KVM virtual machines, firewall stopped, SELinux disabled

Two nodes: node1.com and node4.com

yum install corosync pacemaker -y

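The yum repositories do not provide the crm command, so crmsh must be installed separately.

Packages: crmsh-1.2.6-0.rc2.2.1.x86_64.rpm and pssh-2.3.1-2.1.x86_64.rpm

or, as the pssh alternative: pssh-2.3.1-4.1.x86_64.rpm and python-pssh-2.3.1-4.1.x86_64.rpm

crmsh depends on pssh; if you use pssh-2.3.1-4.1, you must also install python-pssh-2.3.1-4.1.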

Configuring Corosync

vi /etc/corosync/corosync.conf

totem {
    version: 2
    secauth: off
    threads: 0
    interface {
        ringnumber: 0
        # set to the network address of the lab subnet
        bindnetaddr: 192.168.122.0
        # the cluster communicates over multicast; must be identical on all nodes
        mcastaddr: 226.44.1.1
        mcastport: 5405
        ttl: 1
    }
}

logging {
    fileline: off
    to_stderr: no
    to_logfile: yes
    to_syslog: yes
    logfile: /var/log/cluster/corosync.log
    debug: off
    timestamp: on
    logger_subsys {
        subsys: AMF
        debug: off
    }
}

amf {
    mode: disabled
}

# added: load the Pacemaker plugin
service {
    name: pacemaker
    ver: 0
}

With ver: 1, the plugin does not start the Pacemaker daemons, so they must be started separately; with ver: 0, the plugin starts the Pacemaker daemons itself. Use the same configuration on both nodes.
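bindnetaddr is the network address of the subnet the cluster interface sits on (not a host address), and mcastaddr must be a multicast address shared by all nodes. A small Python helper can derive and sanity-check these values. It assumes the node addresses are 192.168.122.24 and 192.168.122.27 (the initiator addresses used later for iSCSI) on a /24 network; `bindnetaddr` and `check_totem` are hypothetical helper names.

```python
import ipaddress


def bindnetaddr(node_ip: str, prefix_len: int) -> str:
    """Network address of the subnet a node's ring interface sits on."""
    iface = ipaddress.ip_interface(f"{node_ip}/{prefix_len}")
    return str(iface.network.network_address)


def check_totem(node_ips, prefix_len, mcastaddr):
    """Return a list of problems with a shared totem interface section."""
    problems = []
    nets = {bindnetaddr(ip, prefix_len) for ip in node_ips}
    if len(nets) != 1:
        problems.append(f"nodes are on different subnets: {sorted(nets)}")
    if not ipaddress.ip_address(mcastaddr).is_multicast:
        problems.append(f"{mcastaddr} is not a multicast address")
    return problems


# Assumed node addresses and /24 prefix (see the lead-in).
print(bindnetaddr("192.168.122.24", 24))
print(check_totem(["192.168.122.24", "192.168.122.27"], 24, "226.44.1.1"))
```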

/etc/init.d/corosync start    # start the corosync service on both nodes

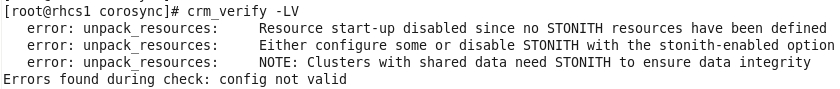

使用crm_verify -LV 先校验配置文件

pacemaker默认启用了stonith,而当前集群并没有相应的stonith设备,因此此默认配置目前尚不可用,这可以通过如下命令先禁用stonith:

crm(live)configure#crm configure property stonith-enabled=false

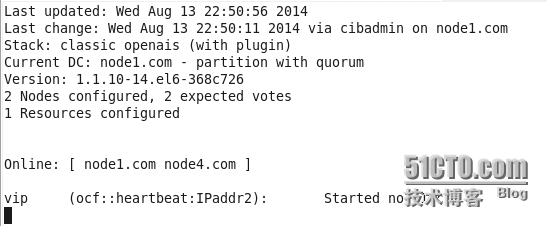

再用crm_verify -LV 命令查看就没有报错了,一个节点配置所有节点同步

Adding the vip resource

crm(live)configure# primitive vip ocf:heartbeat:IPaddr2 params ip=192.168.122.20 cidr_netmask=24 op monitor interval=30s
crm(live)configure# commit
crm(live)configure# show
node node1.com
node node4.com
primitive vip ocf:heartbeat:IPaddr2 \
        params ip="192.168.122.20" cidr_netmask="24" \
        op monitor interval="30s"
property $id="cib-bootstrap-options" \
        dc-version="1.1.10-14.el6-368c726" \
        cluster-infrastructure="classic openais (with plugin)" \
        expected-quorum-votes="2" \
        stonith-enabled="false"
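IPaddr2 adds the virtual IP as an extra address on an interface of whichever node runs the resource, so the VIP should be an unused address inside the nodes' subnet, with a matching cidr_netmask. A hedged Python check, assuming the node addresses 192.168.122.24 and 192.168.122.27; `check_vip` is a hypothetical helper and cannot detect the address being used by some other host on the network.

```python
import ipaddress


def check_vip(vip: str, prefix_len: int, node_ips) -> list:
    """Sanity-check an IPaddr2 virtual IP against the nodes' subnet."""
    problems = []
    vip_if = ipaddress.ip_interface(f"{vip}/{prefix_len}")
    for ip in node_ips:
        addr = ipaddress.ip_address(ip)
        if addr == vip_if.ip:
            problems.append(f"VIP {vip} is already a node address")
        elif addr not in vip_if.network:
            problems.append(f"node {ip} is outside {vip_if.network}")
    return problems


print(check_vip("192.168.122.20", 24, ["192.168.122.24", "192.168.122.27"]))
```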

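The cluster considers itself quorate ("legitimate") only while more than half of the expected votes are online. In this two-node cluster (expected-quorum-votes="2"), a surviving node holds one vote out of two, which is not a majority, so by default (no-quorum-policy=stop) it would stop its resources. Tell Pacemaker to ignore the loss of quorum instead: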

crm(live)configure# property no-quorum-policy=ignore

This ignores the loss of quorum and keeps the resources running.
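The majority rule and the effect of no-quorum-policy fit in a few lines of Python. This is a simplified model assuming one vote per node and only the stop and ignore policies (Pacemaker supports others, such as freeze); `has_quorum` and `keeps_resources` are hypothetical names.

```python
def has_quorum(online_votes: int, expected_votes: int) -> bool:
    """Quorum means strictly more than half of the expected votes."""
    return online_votes > expected_votes // 2


def keeps_resources(online_votes, expected_votes, no_quorum_policy="stop"):
    """Simplified: resources keep running with quorum, or when the policy is 'ignore'."""
    return has_quorum(online_votes, expected_votes) or no_quorum_policy == "ignore"


# One surviving node in a two-node cluster, under each policy.
for policy in ("stop", "ignore"):
    print(policy, keeps_resources(1, 2, policy))
```

Note that half is not a majority: 2 of 4 votes is not quorate either, which is why even-sized clusters gain nothing from the extra node.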

After committing, run crm_mon on the other node to view the resources.

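Adding the web service resource website:

First edit the httpd configuration file on every node that may run the service. Around line 921, uncomment the server-status block; the ocf:heartbeat:apache resource agent monitors httpd through this status page:

vi /etc/httpd/conf/httpd.conf

<Location /server-status>
    SetHandler server-status
    Order deny,allow
    Deny from all
    Allow from 127.0.0.1
</Location>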
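Before handing httpd to the cluster, it can help to confirm on each node that the status page answers locally. A minimal Python probe, assuming httpd listens on port 80 of 127.0.0.1; `server_status_ok` is a hypothetical helper that only approximates the resource agent's own check.

```python
import http.client
import urllib.error
import urllib.request


def server_status_ok(url="http://127.0.0.1/server-status", timeout=5.0) -> bool:
    """Return True if the status page answers with HTTP 200."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status == 200
    except (urllib.error.URLError, OSError, http.client.HTTPException):
        # Connection refused, timeout, or an HTTP error such as 403/404 when the
        # <Location /server-status> block is still commented out or denies access.
        return False


# Usage on a node after starting httpd: server_status_ok()
```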

crm(live)configure# primitive website ocf:heartbeat:apache params configfile=/etc/httpd/conf/httpd.conf op monitor interval=30s
crm(live)configure# commit
WARNING: website: default timeout 20s for start is smaller than the advised 40s
WARNING: website: default timeout 20s for stop is smaller than the advised 60s

These warnings can be avoided by adding op start timeout=40s op stop timeout=60s to the primitive.

crm(live)configure# colocation website-with-vip inf: website vip

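This colocation constraint (score inf) keeps website on the same node as vip; without it, Pacemaker may run the two resources on different nodes.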

crm(live)configure# location master-node website 10: node4.com
(prefer node4.com as the primary node for website, with score 10)
crm(live)configure# commit

crm(live)configure# show
node node1.com
node node4.com
primitive vip ocf:heartbeat:IPaddr2 \
        params ip="192.168.122.20" cidr_netmask="24" \
        op monitor interval="30s"
primitive website ocf:heartbeat:apache \
        params configfile="/etc/httpd/conf/httpd.conf" \
        op monitor interval="30s" \
        meta target-role="Started"
location master-node website 10: node4.com
colocation website-with-vip inf: website vip
property $id="cib-bootstrap-options" \
        dc-version="1.1.10-14.el6-368c726" \
        cluster-infrastructure="classic openais (with plugin)" \
        expected-quorum-votes="2" \
        stonith-enabled="false" \
        no-quorum-policy="ignore"

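Now test failover: run crm_mon on the standby node, then stop the corosync service on the primary node. The resources move to the standby node, and when the primary node recovers they move back, because the location score of 10 for node4.com outweighs the default resource-stickiness of 0. This is the cluster's high availability at work.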
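The failover and failback behaviour can be reproduced with a toy scoring model: resources colocated with score inf move as one unit, each online node gets its location score plus the stickiness bonus if it already runs them, and the highest score wins. This Python sketch is a simplification assuming that the location score on website effectively applies to the whole colocated pair; `place_group` is a hypothetical name, and its alphabetical tie-breaking is not how Pacemaker breaks ties.

```python
INFINITY = float("inf")


def place_group(resources, location_scores, online_nodes, current=None, stickiness=0):
    """Pick one node for resources colocated with score inf (so they move together).

    Score per node = location score (+ stickiness on the node already running them).
    Ties go to the alphabetically first node.
    """
    best_node, best_score = None, -INFINITY
    for node in sorted(online_nodes):
        score = location_scores.get(node, 0)
        if node == current:
            score += stickiness
        if score > best_score:
            best_node, best_score = node, score
    return {rsc: best_node for rsc in resources}


group = ["website", "vip"]
prefs = {"node4.com": 10}  # from: location master-node website 10: node4.com
print(place_group(group, prefs, {"node1.com", "node4.com"}))               # normal operation
print(place_group(group, prefs, {"node1.com"}))                            # node4.com down
print(place_group(group, prefs, {"node1.com", "node4.com"}, "node1.com"))  # node4.com back
```

Passing stickiness=100 in the last call keeps the resources on node1.com, which is how a non-zero resource-stickiness suppresses automatic failback.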

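Adding iSCSI shared storage

First create the shared storage on the host machine (the KVM host acts as the iSCSI target):

vi /etc/tgt/targets.conf

<target iqn.2014-06.com.example:server.target1>
    # the host uses LVM storage
    backing-store /dev/vg_ty/ty
    initiator-address 192.168.122.24
    initiator-address 192.168.122.27
</target>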

$ /etc/init.d/tgtd restart

$ tgt-admin -s    # list all targets

    Type: disk
    SCSI ID: IET 00010001
    SCSI SN: beaf11
    Size: 2147 MB, Block size: 512
    Online: Yes
    Removable media: No
    Prevent removal: No
    Readonly: No
    Backing store type: rdwr
    Backing store path: /dev/vg_ty/ty
    Backing store flags:
Account information:
ACL information:
    192.168.122.24
    192.168.122.27
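When several initiators share one target, it is worth confirming that every cluster node appears in the ACL. A Python sketch that parses the ACL section of tgt-admin -s output; it assumes ACL entries are plain IP addresses (the first line that is not an address ends the list in this sketch), and `acl_addresses` is a hypothetical helper.

```python
import ipaddress


def acl_addresses(tgt_output: str) -> list:
    """Collect the IP addresses listed under 'ACL information:'."""
    addrs, in_acl = [], False
    for raw in tgt_output.splitlines():
        line = raw.strip()
        if line == "ACL information:":
            in_acl = True
            continue
        if in_acl:
            try:
                addrs.append(str(ipaddress.ip_address(line)))
            except ValueError:
                in_acl = False          # first non-address line ends the ACL list
    return addrs


sample = """\
    Backing store path: /dev/vg_ty/ty
Account information:
ACL information:
    192.168.122.24
    192.168.122.27
"""
acl = acl_addresses(sample)
missing = {"192.168.122.24", "192.168.122.27"} - set(acl)
print(acl, missing)
```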

Connect to the iSCSI target from both nodes (the virtual machines):

[root@node1 ~]# iscsiadm -m discovery -p 192.168.122.1 -t st
Starting iscsid: [ OK ]
192.168.122.1:3260,1 iqn.2014-06.com.example:server.target1
[root@node1 ~]# iscsiadm -m node -l
Logging in to [iface: default, target: iqn.2014-06.com.example:server.target1, portal: 192.168.122.1,3260] (multiple)
Login to [iface: default, target: iqn.2014-06.com.example:server.target1, portal: 192.168.122.1,3260] successful.
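Each line of sendtargets discovery output is a portal (IP:port,target portal group tag) followed by the target's IQN, whose format is iqn.YYYY-MM.reversed-domain[:name]. A small Python parser can be handy when scripting the login on both nodes; it assumes IPv4 portals and iqn.-style names, and `parse_discovery` is a hypothetical helper.

```python
import re

# Portal "IP:port,TPGT" followed by the target IQN (iqn.YYYY-MM.naming-authority[:name]).
DISCOVERY_LINE = re.compile(
    r"^(?P<ip>[\d.]+):(?P<port>\d+),(?P<tpgt>\d+)\s+"
    r"(?P<iqn>iqn\.\d{4}-\d{2}\.[^\s:]+(?::\S+)?)$"
)


def parse_discovery(line: str) -> dict:
    """Split one discovery result line into portal fields and the target IQN."""
    m = DISCOVERY_LINE.match(line.strip())
    if m is None:
        raise ValueError(f"unrecognised discovery line: {line!r}")
    return {"ip": m["ip"], "port": int(m["port"]), "tpgt": int(m["tpgt"]), "iqn": m["iqn"]}


print(parse_discovery("192.168.122.1:3260,1 iqn.2014-06.com.example:server.target1"))
```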

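Partition, format, and populate the disk on one node only; ext4 is not a cluster filesystem, so it must never be mounted on both nodes at the same time.

fdisk -l    # the newly attached disk should now be listed

fdisk -cu /dev/sda    # create one partition, /dev/sda1

mkfs.ext4 /dev/sda1    # format it as ext4

mount /dev/sda1 /var/www/html

echo 'node1.com' > /var/www/html/index.html

umount /dev/sda1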

crm(live)configure# primitive webfs ocf:heartbeat:Filesystem params device=/dev/sda1 directory=/var/www/html fstype=ext4 op monitor interval=30s
crm(live)configure# colocation webfs-with-website inf: webfs website
(keep webfs and website on the same node)
crm(live)configure# order website-after-webfs inf: webfs website
(start the filesystem webfs first, then the website service)
crm(live)configure# commit
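The order constraint means webfs must be running before website starts, and, because mandatory order constraints are symmetrical by default, website is stopped before webfs is unmounted. The same reasoning can be sketched with Python's graphlib (Python 3.9 or newer); `start_stop_order` is a hypothetical helper, and resources without an ordering relationship, such as vip here, are simply not constrained by it.

```python
from graphlib import TopologicalSorter  # Python 3.9+


def start_stop_order(order_constraints):
    """Return (start order, stop order) for mandatory 'first then' constraints.

    Stop order is the reverse of start order, mirroring symmetrical ordering.
    Raises graphlib.CycleError if the constraints contain a loop.
    """
    sorter = TopologicalSorter()
    for first, then in order_constraints:
        sorter.add(then, first)          # 'then' depends on 'first'
    start = list(sorter.static_order())
    return start, start[::-1]


start, stop = start_stop_order([("webfs", "website")])
print("start:", start)
print("stop:", stop)
```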

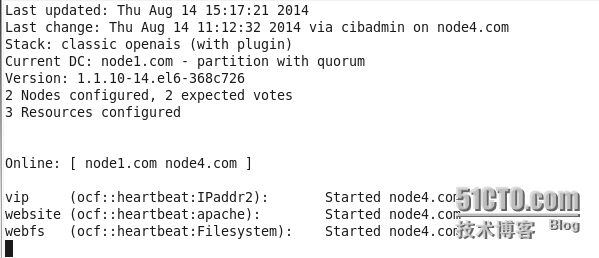

Now check the resource status.

The service runs on the primary node, node4.com; if node4.com goes down, the standby node node1.com takes over the resources.

This article originally appeared on the “划舞鱼” blog; please retain this attribution: http://ty1992.blog.51cto.com/7098269/1539962
